Exclusive Update: Is Google Cancelling Support for Robots.txt Noindex?

Google has officially announced that Googlebot will stop obeying the robots.txt directive related to indexing. Publishers who rely on the robots.txt noindex directive have until September 1, 2019 to remove it and switch to one of the alternatives.

It’s Unofficial: Robots.txt Noindex

As we all know, robots.txt is very easy to use, yet remarkably powerful: by specifying a user-agent and rules for it, website admins can control what crawlers may access. It makes no difference whether the target is a single URL, a specific file type, or an entire website. Robots.txt can handle each of them.
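To make the user-agent and rule idea concrete, here is a minimal sketch using Python's standard `urllib.robotparser`. The robots.txt content, the `example.com` URLs, and the `OtherBot` crawler name are assumptions made up for illustration. Note that this parser only matches path prefixes and is not a model of how Googlebot itself evaluates rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: Googlebot is kept out of /private/ only,
# while every other crawler is blocked from the whole site.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: *
Disallow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Ask whether a given crawler may fetch a given URL.
googlebot_private = parser.can_fetch("Googlebot", "https://example.com/private/report.html")
googlebot_blog = parser.can_fetch("Googlebot", "https://example.com/blog/")
otherbot_blog = parser.can_fetch("OtherBot", "https://example.com/blog/")

print(googlebot_private, googlebot_blog, otherbot_blog)
```

With these sample rules, Googlebot is refused under `/private/` but allowed elsewhere, and the hypothetical `OtherBot` is refused everywhere.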

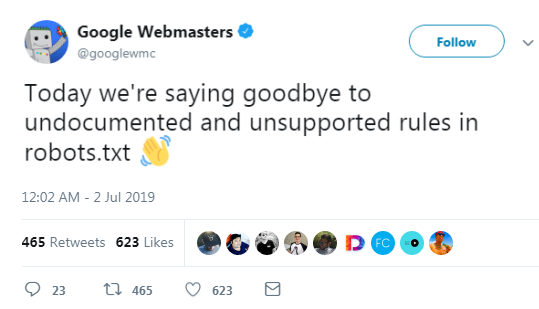

On 2 July 2019, Google officially tweeted:

Why Has Google Decided to Change It?

Google has wanted to make this change for years. The robots.txt noindex directive was never an official one, and dropping it lets Google point site owners toward the methods it genuinely supports going forward.

Google said it is dropping its handling of rules that the robots.txt internet draft does not cover, such as noindex, crawl-delay, and nofollow. Because Google never documented these rules, their use in relation to Googlebot is very low. Google added that mistakes with these rules hurt sites’ visibility in its search results in ways it does not think webmasters intended.
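If you are not sure whether your own robots.txt still uses any of these rules, a small script can flag them. The following is a rough sketch, not an official tool: the function name `find_unsupported_rules` and the sample file content are assumptions for illustration.

```python
# Rule names mentioned above as unsupported in robots.txt.
UNSUPPORTED_RULES = {"noindex", "nofollow", "crawl-delay"}


def find_unsupported_rules(robots_txt: str) -> list[tuple[int, str, str]]:
    """Return (line number, rule name, value) for each unsupported rule found."""
    findings = []
    for number, raw_line in enumerate(robots_txt.splitlines(), start=1):
        # Drop trailing comments and surrounding whitespace.
        line = raw_line.split("#", 1)[0].strip()
        if ":" not in line:
            continue
        field, value = line.split(":", 1)
        field = field.strip().lower()
        if field in UNSUPPORTED_RULES:
            findings.append((number, field, value.strip()))
    return findings


# Hypothetical robots.txt content used only to demonstrate the check.
SAMPLE = """\
User-agent: *
Disallow: /tmp/
Noindex: /drafts/
Crawl-delay: 10
"""

for number, field, value in find_unsupported_rules(SAMPLE):
    print(f"line {number}: '{field}: {value}' should be replaced with a supported method")
```

Any line the script reports is a candidate for removal before the September 1, 2019 deadline.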

How Can You Control Web Crawling?

The following options let you control how your website is indexed in Google Search:

- Noindex in robots meta tags: The noindex directive is supported both in HTTP response headers and in HTML. It is the most effective way to keep one or more URLs out of the index while crawling is still allowed.

- 404 and 410 HTTP status codes: Both codes indicate that the page at that URL does not exist. Google drops such URLs from its index once they have been crawled and processed.

- Password protection: Hiding a page behind a login will generally remove it from Google's index.

- Disallow in robots.txt: Search engines can only index pages they know about, so blocking a page from being crawled usually means its content will not be indexed. A blocked URL can still be indexed based on links from other pages, without Google seeing its content, and Google says it aims to make such pages less visible in the future.

- Search Console Remove URL tool: If you want to remove a URL from Google's search results temporarily, this tool is a quick and easy way to do it.
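The first two options in the list above can be sketched in a few lines. This is only an illustration under stated assumptions: the paths, the `plan_response` helper, and the idea of keeping the pages in two sets are all hypothetical, and a real site would set these values in its web server or framework. The `X-Robots-Tag: noindex` response header and the robots meta tag are the two forms of the noindex directive.

```python
from http import HTTPStatus

# HTML form of the directive: place this tag inside the page's <head>.
NOINDEX_META = '<meta name="robots" content="noindex">'

# Hypothetical examples of pages to keep out of the index.
NOINDEX_PATHS = {"/thank-you"}      # page still exists, but should not be indexed
REMOVED_PATHS = {"/old-campaign"}   # page is gone for good


def plan_response(path: str) -> tuple[HTTPStatus, dict[str, str]]:
    """Pick the status code and extra headers to send for a requested path."""
    if path in REMOVED_PATHS:
        # 410 Gone tells crawlers the page no longer exists.
        return HTTPStatus.GONE, {}
    if path in NOINDEX_PATHS:
        # HTTP header form of noindex; the page stays crawlable.
        return HTTPStatus.OK, {"X-Robots-Tag": "noindex"}
    return HTTPStatus.OK, {}


for path in ("/thank-you", "/old-campaign", "/blog"):
    status, headers = plan_response(path)
    print(path, int(status), headers)
```

The header form is handy for non-HTML files such as PDFs, where a meta tag cannot be added.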

Let’s see what other changes Google makes to encourage better use of these protocols in the future. To keep up with new updates, follow Google’s posts on Twitter.